- OpenAI and Google ask US government to allow AI to train on copyrighted material.

- Urge adoption of copyright exemptions for ‘national security.’

OpenAI and Google are pushing the US government to allow AI models to train on copyrighted material, arguing that ‘fair use’ is critical for maintaining the country’s competitive edge in artificial intelligence.

Both companies outlined their positions in proposals submitted this week in response to a request from the White House for input on President Donald Trump’s “AI Action Plan.”

OpenAI’s national security argument

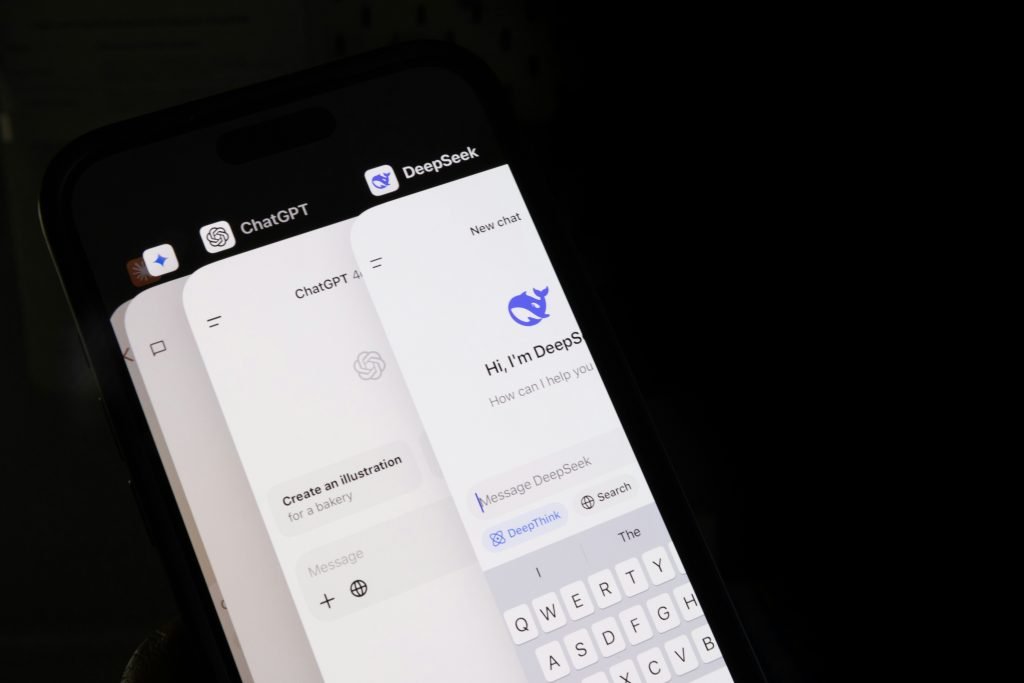

According to OpenAI, allowing AI companies to use copyrighted material for training is a national security issue. The company warned that if US firms are restricted from accessing copyrighted data, China could outperform the US in AI development.

OpenAI specifically highlighted the rise of DeepSeek as evidence that Chinese developers have unrestricted access to data, including copyrighted material. “If the PRC’s developers have unfettered access to data and American companies are left without fair use access, the race for AI is effectively over,” OpenAI stated in its filing.

Google’s position on copyright and fair use

Google supported OpenAI’s stance, arguing that copyright, privacy, and patent laws could create barriers to AI development if they restrict access to data.

The company highlighted that fair use protections and text and data mining exceptions have been crucial for training AI models using publicly available content. “These exceptions allow for the use of copyrighted, publicly available material for AI training without significantly impacting rightsholders,” Google said. Without these protections, developers could face “highly unpredictable, imbalanced, and lengthy negotiations” with data holders during model development and research.

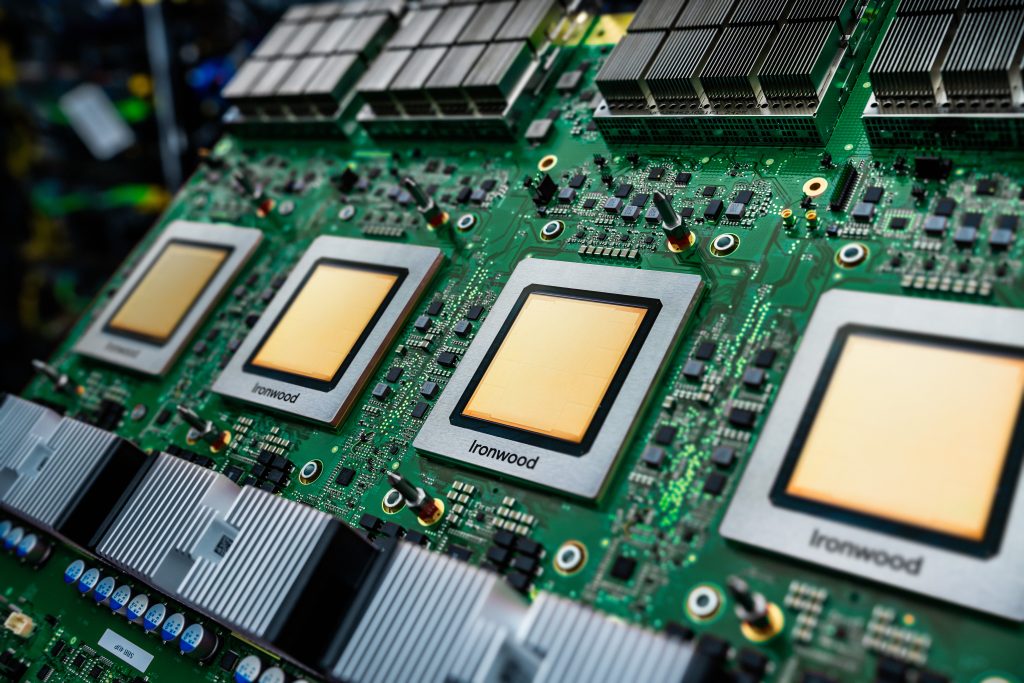

Google also revealed a broader strategy to strengthen the US’s competitiveness in AI. The corporation called for increased investment in AI infrastructure, including addressing rising energy demands and establishing export controls to preserve national security while supporting AI exports to foreign markets.

It emphasised the need for collaboration between federal and local governments to support AI research through partnerships with national labs and improved access to computational resources.

Google recommended the US government take the lead in adopting AI, suggesting the implementation of multi-vendor AI solutions and streamlined procurement processes for emerging technologies. It warned that policy decisions will shape the outcome of the global AI race, urging the government to adopt a “pro-innovation” approach that protects national security.

Anthropic’s focus on security and infrastructure

Anthropic, the developer of the Claude chatbot, also submitted a proposal but did not add to the statements on copyright. Instead, the company called on the US government to create a system for assessing national security risks tied to AI models and to strengthen export controls on AI chips. It also urged investment in energy infrastructure to support AI development, pointing out that AI models’ energy demands will continue to grow.

Copyright lawsuits and industry concerns

The proposals come as AI companies face increasing legal challenges over the use of copyrighted material. OpenAI is currently dealing with lawsuits from major news organisations, including The New York Times, and from authors like Sarah Silverman and George R.R. Martin. These cases allege that OpenAI used content, without permission, to train its models.

Other AI firms, including Apple, Anthropic, and Nvidia, have also been accused of using copyrighted material. YouTube has claimed that these companies violated its terms of service by scraping subtitles from its platform to train AI models, a remarkable instance of the pot calling the kettle black.

Industry pressure to clarify copyright rules

AI developers worry that restrictive copyright policies could disadvantage US firms, as China and other nations continue to invest heavily in AI without restrictions on the use of copyrighted material. Content creators and rightsholders disagree, arguing that AI businesses should not be able to use their work without fair compensation.

The White House’s AI Action Plan is expected to set the foundation for future US policy on AI development and data access, with potential implications for both the technology sector and content industries.
