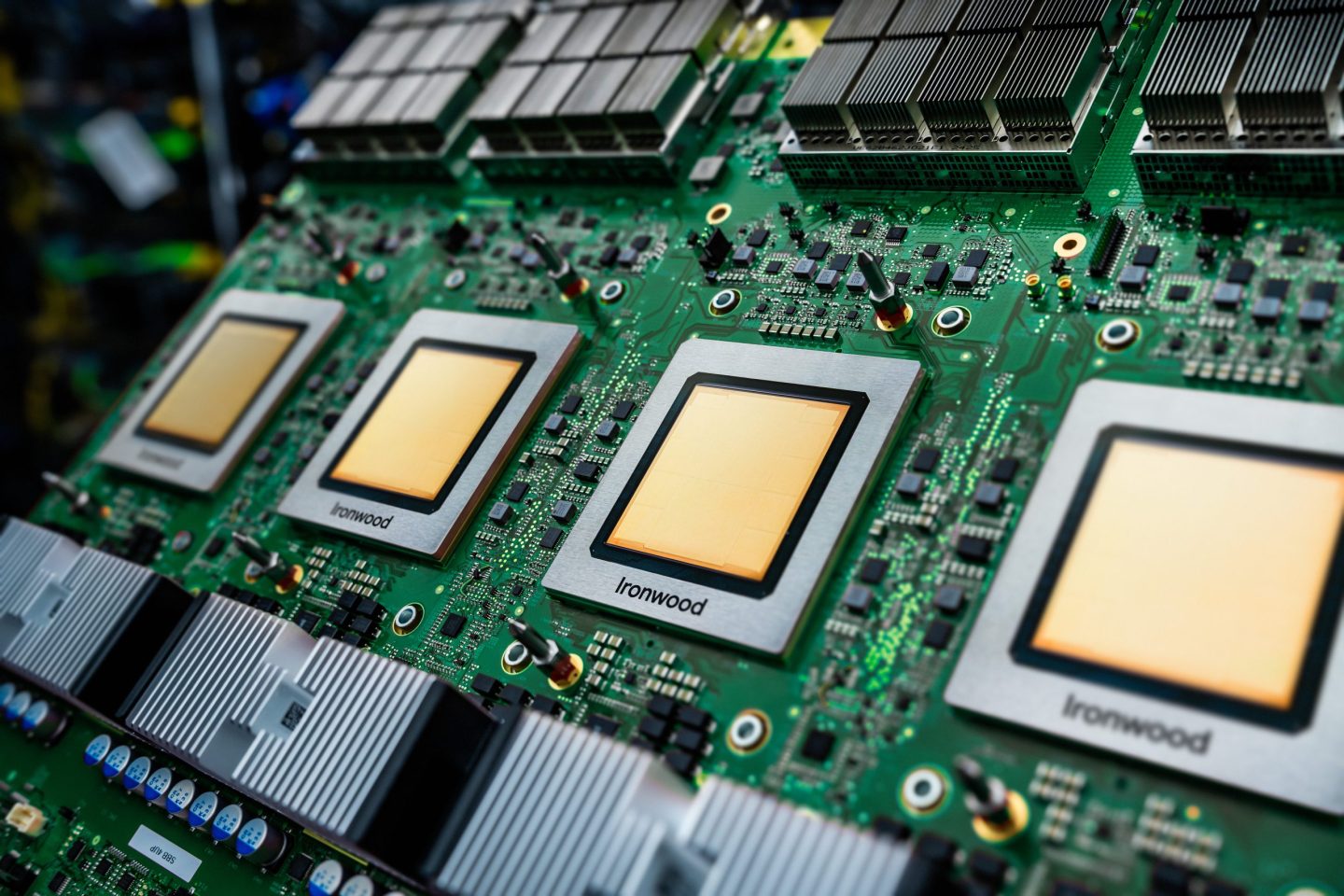

- Google’s Ironwood TPU is purpose-built for AI inference.

- Designed to support high-demand applications like LLMs and MoE models.

Google has introduced Ironwood, its seventh-generation Tensor Processing Unit (TPU), at Google Cloud Next 2025. The chip is designed specifically for large-scale inference workloads.

The chip marks a shift in focus from training to inference, reflecting broader changes in how AI models are used in production. TPUs have been a core part of Google’s infrastructure for several years, powering both internal services and customer applications. Ironwood builds on that line with enhancements aimed at the next wave of AI applications, including large language models (LLMs), Mixture of Experts (MoE) models, and other compute-intensive tools that require real-time responsiveness and scalability.

Inference takes centre stage

Ironwood is designed for what Google calls the “age of inference,” in which AI systems proactively generate insights and interpretations rather than simply responding to inputs. The shift is reshaping how AI models are deployed, particularly in business settings, where continuous, low-latency performance is essential.

Ironwood brings a number of architectural upgrades. Each chip delivers 4,614 teraflops of peak compute, backed by 192GB of high-bandwidth memory (HBM) and up to 7.2 terabytes per second of memory bandwidth, significantly more than previous TPUs.

The expanded memory capacity and bandwidth are intended to support models that need rapid access to large amounts of data, such as those used in search, recommendation systems, and scientific computing.
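
To make the bandwidth figure concrete, here is a rough, roofline-style sketch built only from the article’s per-chip numbers. It assumes, purely for illustration, that generating one token requires streaming every model weight from HBM once (batch size of one), so time per token cannot drop below weight bytes divided by memory bandwidth. It ignores batching (which spreads each weight read across many requests), KV-cache traffic, and compute time, and the 70GB model size is hypothetical.

```python
# Rough lower bound on memory-bound inference latency (illustrative only).
# Assumption: each generated token streams all weights from HBM once,
# at batch size 1, with no compute or KV-cache cost counted.
HBM_CAPACITY_GB = 192        # per-chip HBM capacity quoted in the article
HBM_BANDWIDTH_TB_S = 7.2     # per-chip peak HBM bandwidth quoted in the article


def min_ms_per_token(weight_gb: float, bandwidth_tb_s: float = HBM_BANDWIDTH_TB_S) -> float:
    """Floor on time per token in ms.

    GB / (TB/s) equals milliseconds: 1e9 bytes / 1e12 bytes/s = 1e-3 s.
    """
    return weight_gb / bandwidth_tb_s


full_hbm_ms = min_ms_per_token(HBM_CAPACITY_GB)   # one sweep of all 192GB
example_ms = min_ms_per_token(70.0)               # hypothetical 70GB of weights

print(f"full 192GB sweep: {full_hbm_ms:.1f} ms")
print(f"70GB of weights:  {example_ms:.1f} ms/token, at most {1000 / example_ms:.0f} tokens/s")
```

Under these assumptions, a single full pass over the chip’s HBM takes roughly 27 ms, which is why bandwidth, not just peak teraflops, tends to bound latency-sensitive inference.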

Ironwood also features an improved version of SparseCore, a component aimed at accelerating ultra-large embedding models that are often used in ranking and personalisation tasks.
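
The article does not describe how SparseCore works internally. As a hedged illustration of the kind of operation embedding accelerators target, the sketch below shows a plain "embedding bag" lookup: gather a handful of rows from a large table by ID, then sum-pool them into one dense vector. The function name and toy table are hypothetical; the point is that this gather-and-reduce pattern is dominated by scattered memory reads rather than dense matrix math.

```python
# Hypothetical illustration of an embedding-bag lookup with sum pooling.
# This is NOT SparseCore's API; it only shows the access pattern
# (sparse gather by ID, then reduce) that such hardware accelerates.
from typing import List, Sequence


def embedding_bag(table: Sequence[Sequence[float]], ids: List[int]) -> List[float]:
    """Sum the embedding rows selected by `ids` into one pooled vector."""
    dim = len(table[0])
    pooled = [0.0] * dim
    for i in ids:                 # each ID triggers a random-access row read
        row = table[i]
        for d in range(dim):      # reduce the gathered row into the output
            pooled[d] += row[d]
    return pooled


# Toy 1,000-row, 2-dimensional table; real ranking tables can hold
# billions of rows, which is what makes the gather memory-bound.
table = [[float(r), 2.0 * r] for r in range(1_000)]
print(embedding_bag(table, [3, 10, 999]))
```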

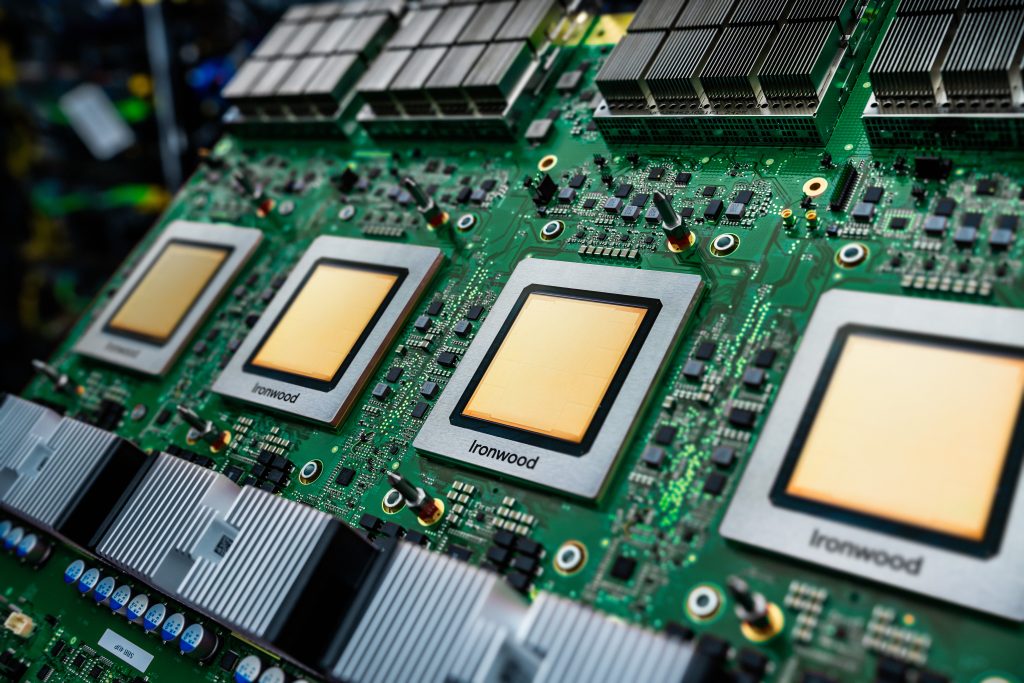

Scale and connectivity

Ironwood can be deployed in pods of either 256 or 9,216 chips. At full scale, a 9,216-chip pod delivers 42.5 exaflops of compute, which Google says makes it more than 24 times as powerful as the El Capitan supercomputer, which tops out at 1.7 exaflops.
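
The pod-level number follows directly from the per-chip figure, as the short calculation below shows, assuming perfect linear scaling (real workloads will not reach aggregate peak). One caveat: Google’s 4,614-teraflop figure is generally reported as FP8 peak compute, while El Capitan’s 1.7 exaflops is a 64-bit (FP64) benchmark result, so the ratio compares different numeric precisions and should be read loosely.

```python
# Back-of-envelope check of the pod-level peak figures in the article.
# Assumes perfect linear scaling; precisions differ between the two systems.
CHIP_PEAK_TFLOPS = 4_614          # per-chip peak compute
CHIPS_SMALL_POD = 256             # smaller pod configuration
CHIPS_FULL_POD = 9_216            # full-scale pod
EL_CAPITAN_EXAFLOPS = 1.7         # figure used in the comparison

TFLOPS_PER_EXAFLOPS = 1_000_000   # 1 EFLOPS = 10^18 FLOPS = 10^6 TFLOPS


def pod_peak_exaflops(chips: int) -> float:
    """Aggregate peak of a pod in exaflops."""
    return chips * CHIP_PEAK_TFLOPS / TFLOPS_PER_EXAFLOPS


small_pod_exaflops = pod_peak_exaflops(CHIPS_SMALL_POD)
pod_exaflops = pod_peak_exaflops(CHIPS_FULL_POD)
ratio = pod_exaflops / EL_CAPITAN_EXAFLOPS

print(f"256-chip pod:   {small_pod_exaflops:.2f} EFLOPS")
print(f"9,216-chip pod: {pod_exaflops:.1f} EFLOPS (~{ratio:.0f}x the 1.7 EFLOPS figure)")
```

Multiplying 9,216 chips by 4,614 teraflops gives about 42.52 exaflops, consistent with the 42.5-exaflop figure Google quotes.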

To support distributed computing at this scale, Ironwood includes an enhanced version of Google’s Inter-Chip Interconnect (ICI), which offers 1.2 terabits per second of bidirectional bandwidth. This reduces bottlenecks so data can move efficiently across thousands of chips during training or inference. Ironwood is also integrated with Pathways, the distributed machine learning runtime developed by Google DeepMind. Pathways lets workloads span multiple pods, so developers can orchestrate tens or hundreds of thousands of chips for a single model or application.
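
Pathways’ actual programming interface is not covered in the article. As a hypothetical, plain-Python picture of what "one job across many pods" can mean, the sketch below shows the simplest possible layout, data parallelism: one global batch is split evenly across every chip in every pod, and each chip is addressed by a (pod, chip) pair. The function and its parameters are illustrative assumptions, not Google APIs.

```python
# Hypothetical data-parallel layout (not the Pathways API): split one global
# batch evenly across all chips in several pods, keyed by (pod, chip).
from typing import Dict, Tuple


def shard_batch(batch_size: int, num_pods: int, chips_per_pod: int) -> Dict[Tuple[int, int], range]:
    """Map each (pod, chip) pair to the contiguous slice of example indices it handles."""
    total_chips = num_pods * chips_per_pod
    if batch_size % total_chips:
        raise ValueError("batch_size must divide evenly across all chips")
    per_chip = batch_size // total_chips
    shards: Dict[Tuple[int, int], range] = {}
    for global_id in range(total_chips):
        pod, chip = divmod(global_id, chips_per_pod)   # flat ID -> (pod, chip)
        start = global_id * per_chip
        shards[(pod, chip)] = range(start, start + per_chip)
    return shards


# Two full 9,216-chip pods, four examples per chip.
shards = shard_batch(batch_size=2 * 9_216 * 4, num_pods=2, chips_per_pod=9_216)
print(len(shards), shards[(1, 0)])
```

Real systems also shard model weights and must exchange results between chips, which is where interconnect bandwidth matters; this sketch deliberately shows only the batch split.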

Efficiency and sustainability

Ironwood delivers twice the performance per watt of its predecessor, Trillium, and can maintain high output under sustained workloads. The TPU uses liquid cooling and, according to Google, is nearly 30 times more power-efficient than the first Cloud TPU, introduced in 2018. The emphasis on energy efficiency reflects growing concerns about the environmental impact of large-scale AI infrastructure as demand continues to rise.

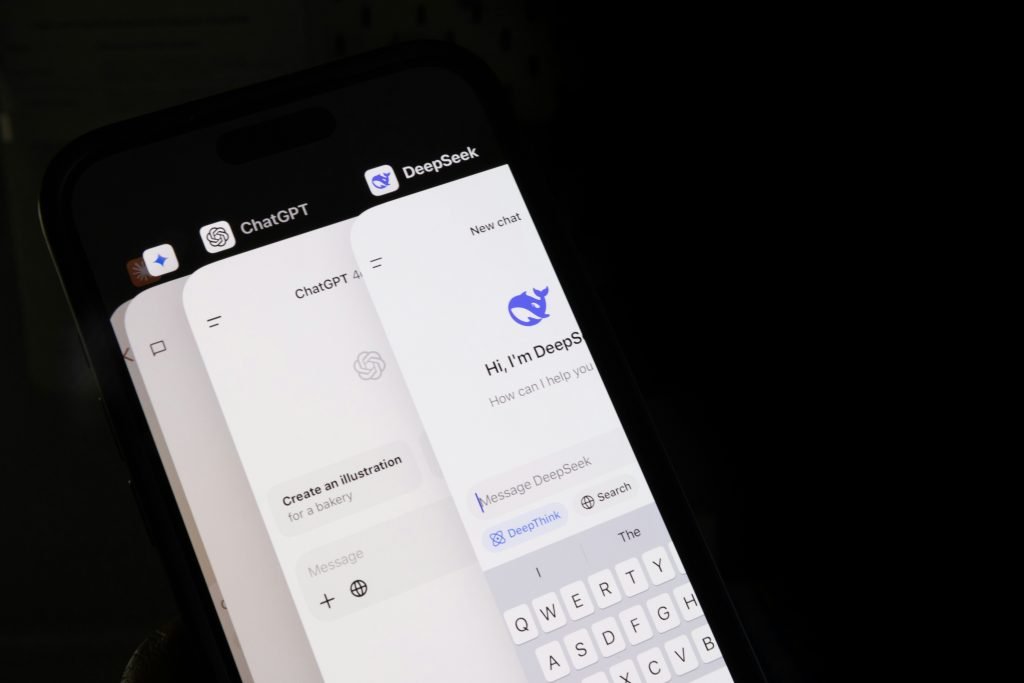

Supporting real-world applications

Ironwood’s architecture supports “thinking models,” which are increasingly used in real-time applications such as chat interfaces and autonomous systems. Its capabilities could also suit finance, logistics, and bioinformatics workloads that require fast, large-scale computation. Google has integrated Ironwood into its Cloud AI Hypercomputer strategy, which combines custom hardware with tools such as Vertex AI.

What comes next

Google plans to make Ironwood publicly available later this year to support workloads such as Gemini 2.5 and AlphaFold. The chip is expected to be used in research and production environments that demand large-scale distributed inference.